GPT-3 is eating the world!

Large Language Models, a new frontier

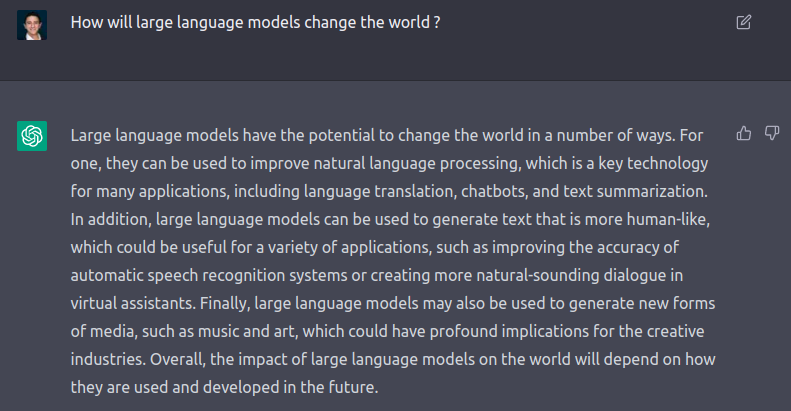

A large language model is a type of artificial intelligence model that is trained to generate human-like text. This is typically done by feeding the model a large amount of text data and then training it to predict the next word in a sequence, based on the words that came before it. The goal of a large language model is to generate text that is coherent and sounds like it was written by a human.
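To make "predict the next word" concrete, here is a toy sketch in Python. It is not how GPT-3 works internally (GPT-3 is a neural network trained on a huge corpus); it just counts which word follows which in a tiny made-up text, which is the simplest possible version of the same idea. The function names and the sample corpus are my own assumptions, chosen purely for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, start_word, length=5, seed=0):
    """Sample a short continuation, picking followers in proportion to their counts."""
    rng = random.Random(seed)
    output = [start_word.lower()]
    for _ in range(length):
        followers = model.get(output[-1])
        if not followers:
            break  # dead end: the last word never had a follower in training
        candidates = list(followers.keys())
        weights = list(followers.values())
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)


# Hypothetical toy corpus; a real LLM is trained on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))  # cat
print(generate(model, "the"))
```

The `generate` function samples instead of always taking the top word, so different seeds give different sentences. A real LLM replaces the counting table with a neural network that looks at far more context than one word, but the training objective is the same kind of guess.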

GPT-3 and similar large language models (LLMs) are the building blocks of a whole new generation of software. While a lot of hype surrounded text generation, LLMs are actually capable of way more than that.

Companies are already exploring applications of the technology in a wide range of fields, including machine translation, customer support, legal document analysis, virtual personal assistants, content creation, and more.

Indeed, LLMs are actively discussed in academic circles and deeply explored by companies, as their capabilities are being used to develop more advanced machine learning systems and algorithms. Their influence will likely be felt for years (decades?) to come.

What is clear is that LLMs demonstrate that AI has reached a point where it can handle complex tasks efficiently, and in a non-deterministic way that makes interacting with it feel natural. This is a massive breakthrough for the field and will open up exciting new possibilities for better ML-based mainstream technology.

LLMs are everywhere!

GPT-3 is publicly available as an API and can be integrated into any app or software product at minimal cost, without having to manage the underlying infrastructure and code. Here are a few domains where LLMs have been used successfully:

- AI Art: text-to-image generative models like DALL-E & Stable Diffusion build on language models (a language model encodes the text prompt into a representation that is then used to generate the image).

- Writing code: GitHub Copilot, the code-autocompletion tool that makes developers so much more productive, uses the OpenAI Codex model, which is itself based on GPT-3.

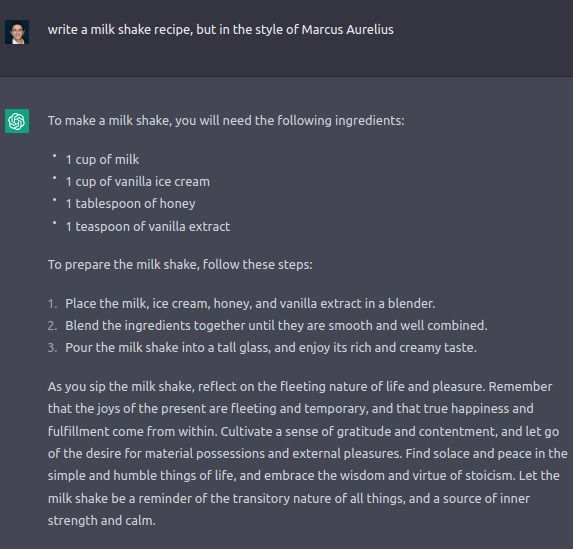

- Literature: LLMs can write books (here’s a list) or help writers with their work, with surprising quality. LEX is a good app for that.

- Web search: Google and Bing both use LLMs on a large scale for their search engines.

- Question answering & chat: I was personally blown away by ChatGPT. Here are some examples, and a link to try it out yourself and be amazed. ChatGPT seems to be a really good search engine too.

A new industry?

LLMs are facing huge demand and becoming a commodity. Demand is mostly driven by incumbents that leverage their existing distribution to enhance their products (Notion's new Notion AI, Microsoft Word's writing assistant, Google Search). These incumbents are fully aware of the advances in AI, are often the ones driving them, and can afford the largest R&D budgets.

But sometimes the best use cases of LLMs don’t fit cleanly into existing products and require new experiences. If an app needs to be significantly reworked to accommodate generative AI, its maker might hesitate (ex: adding image generation to Photoshop vs. a new, specialized product like Runway). And that is how entirely new products are born, like Spellbook, an AI that drafts legal contracts, and LEX, a Google Docs clone that helps you write better and faster. Here's a bigger list (the "real" list is much bigger and expanding at a crazy pace).

There are also a lot of companies specializing in AI infrastructure and model serving, like Hugging Face and GooseAI.

With specialized hardware & serving infrastructure making training and serving LLMs faster and cheaper, we will probably see a Cambrian explosion of use cases and products. Also, GPT-4 is on its way 😉

By the way, the most popular models and the greatest advances come from privately held organizations like OpenAI, DeepMind, and Stability AI.

Here’s my take: LLMs are not just a shiny new thing. Clearly something big is happening.

All in all, LLMs are making the super famous Software 2.0 article (2017) sound way more realistic than it did a few years ago, even if there is still so much to build and explore. Maybe GPT-3 (thanks to Transformers, the underlying ML technology) is the killer app machine learning has been waiting for? Apple seems to believe so: they added the Neural Engine, a piece of specialized hardware that makes machine learning models really fast, to most of their product line, and they are actively optimizing it for Transformers (1 2). Why is this a big deal? Because Apple sets the standard for what consumer devices look like.

A Transformer is a very, very good statistical guesser. It wants to know what is coming next in your sentence or phrase or piece of language, or in some cases, piece of music or image or whatever else you’ve fed to the Transformer. I documented [a new AI model] coming out every 3-4 days in March through April 2022. We’ve now got 30, 40, 50 different large language models… We used to say that artificial general intelligence and the replacement of humans would be like 2045. I’m seeing the beginnings of AGI [Artificial general intelligence] right now.

~ Dr Alan D. Thompson for the ABC2. June 2022.

Thanks for reading!